This is why you need a solid infrastructure to be agent-ready in 2025

Before Microsoft 365 Copilot Agents can deliver real value, the foundation must be solid: clean data, proper permissions, and a reliable infrastructure. This guide explains why data quality determines AI success, highlights risks like oversharing and silos, and outlines 10 practical steps to make your M365 environment agent-ready—secure, compliant, and scalable.

Prologue

With this omnipresence, many ideas and the desire to take action or at least experiment arise. At glueckkanja AG, we support our customers throughout this process. Of course, we are already developing and building agents, but in 80% of our projects, the primary focus is on preparing the data and tenant for agent creation. Before you implement Copilot productively in your organization, it's worthwhile to take a critical look at your infrastructure. When making decisions in this area, there are several important aspects to understand before deploying AI agents on a large scale. That’s why, in this blog post, I will guide you through the essential steps and differences. In a time when AI assistants like Microsoft 365 Copilot Agents promise to transform the working world, one principle holds true above all: AI is only as good as the system beneath it.

This comprehensive guide outlines how to prepare your data and infrastructure for Copilot Agents, covering key practices in SharePoint, Teams, and the Power Platform.

Why your infrastructure (data) matters

As we utilize AI agents, it is imperative to understand that these agents do not inherently possess knowledge about our organization, our data, or our unique operational context. By default, an AI agent only carries the built-in knowledge derived from the training of the Large Language Model (LLM). To effectively enhance and extend the capabilities of these AI agents, it is essential to systematically integrate various components. This enhancement can be achieved through the implementation of System Prompts, Knowledge Bases, Connectors, Web-Search functionalities, access to Microsoft Graph, Semantic Search, and additional tools. These components collectively enable the AI agents to deliver more precise, contextually relevant responses and actions, aligning closely with the specific needs and data of the organization. Since we are now in the very beginning of the agentic area, many of us will start with simple agents that source information based on existing SharePoint Online libraries.

For us in IT, that means we need to take care about our data in SharePoint Online more than ever!

SharePoint Online = Knowledge = Data and Data = Key

My clear message: Before adding AI copilots to your organization, get your data house in order. The same data that feeds your Copilot Agents also feeds Microsoft 365 Copilot itself.

And not only that! Microsoft 365 Copilot is assessing the same data. *If that data is cluttered, overshared, or poorly secured, the AI could surface incorrect or sensitive information unexpectedly *or example, imagine asking Copilot about company structure and receiving details of a confidential reorganization plan you weren’t meant to see. Such incidents occur when content is overshared (available too broadly) on platforms like SharePoint or Teams. Note: Copilot respects all existing permissions, that means something like only can happen when permissions are misconfigured. Conversely, if data is siloed or inaccessible, AI assistants will be less useful.

Copilot only surfaces organizational data that the individual user has at least view permissions for!

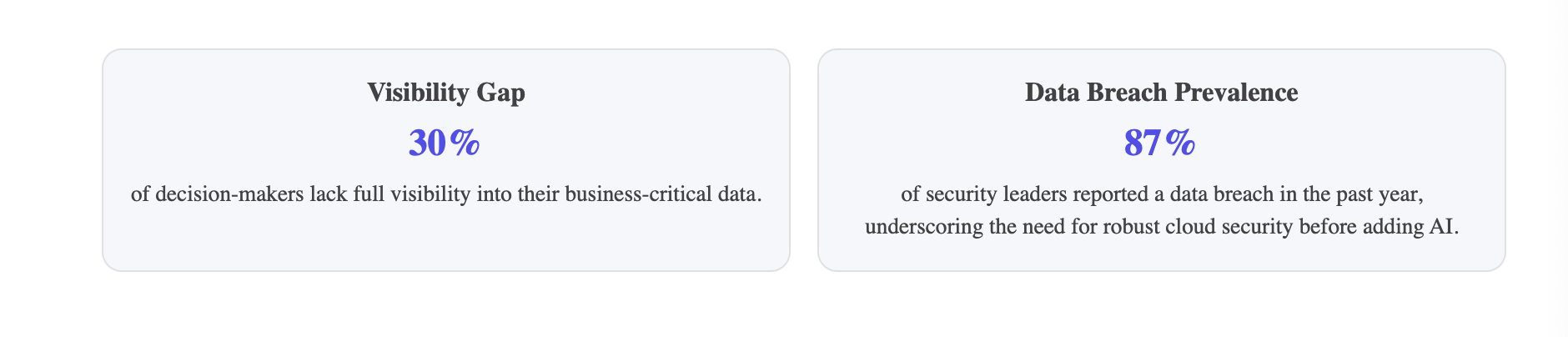

Key takeaway: Enterprise AI succeeds only with a solid data foundation. A recent Microsoft report identifies data oversharing, data leakage, and noncompliant usage as top challenges to address before deploying AI. Organizations that invest in preparation of SharePoint Online and other data sources, will unlock Copilot’s benefits with confidence, while those who don’t risk security breaches or irrelevant AI outputs. Studies show about one-third of decision-makers lack full visibility into critical data.

Sources: https://www.fortanix.com/company/pr/2025/02/fortanix-releases-2025-genai-data-security-report

Sources: https://www.fortanix.com/company/pr/2025/02/fortanix-releases-2025-genai-data-security-report

Verify tenant-wide settings that could lead to oversharing. For example, scrutinize default link sharing policies (e.g. if “Anyone with the link” or “People in your organization” is allowed by default for SharePoint/OneDrive), whether users can create public Teams by default, and if your Power Platform environment is open without governance. Misconfigured defaults here are a common cause of unintentional broad access.

Verify tenant-wide settings that could lead to oversharing. For example, scrutinize default link sharing policies (e.g. if “Anyone with the link” or “People in your organization” is allowed by default for SharePoint/OneDrive), whether users can create public Teams by default, and if your Power Platform environment is open without governance. Misconfigured defaults here are a common cause of unintentional broad access.

10 steps to improve your M365 data infrastructure now

Now we know your agents will need data. As we as glueckkanja step in these projects, this is our typical 10-point list that we work from the top to end with our customers.

Step 1: Check Core Sharing Settings

Verify tenant-wide settings that could lead to oversharing. For example, scrutinize default link sharing policies (e.g. if “Anyone with the link” or “People in your organization” is allowed by default for SharePoint/OneDrive), whether users can create public Teams by default, and if your Power Platform environment is open without governance. Misconfigured defaults here are a common cause of unintentional broad access..

Step 2: Audit Public Teams

Review any Microsoft Teams marked as “Public.” A public Team means anyone in your organization can discover and access its content. Ensure that any Team set to public truly contains only non-sensitive, broadly suitable content. If not, switch it to private or adjust membership. (It’s easy for a Team to be created as Public and later forgotten, exposing files to all employees.)

Step 3: Review Graph Connectors

Check if your tenant has any Microsoft Graph Connectors set up that pull in third-party data (e.g. from external file systems, wikis, etc.). Remove or secure any connector that indexes data not everyone should see. Why? Content indexed via Graph Connectors becomes part of your Microsoft Graph search index – meaning Copilot can potentially use it to answer prompts. You only want relevant, intended data sources connected.

Step 4: Generate a SharePoint Online Baseline Report

SPO has different possible risks for unwanted data in Agents and Copilot. You need to look for different key metrics:

- Broken Permission Inheritance on a folder-level

- Public SharePoint Sites

- Use of "Everyone Except External Users" or other dynamic group that contain all users

- Anyone Sharing Links

- Everyone-in-my-org Sharing Links

- Unwanted people in the Site Admins / Owners / Members / Visitors Group

Step 5: Categorize and Prioritize Risks

Take the findings from Steps 1–4 and rank them by severity. Which sites or files carry the most business-critical or sensitive data and also have exposure risks? Prioritize fixing those. By layering business context (e.g., a site with financial data vs. a site with generic templates), you can focus on the most impactful issues first.

Step 6: Involve Site Owners for Access Reviews

For each SharePoint site (or Team) highlighted as risky, have the site owner double-check who has access and if that is appropriate. Owners are typically closest to the content and can quickly spot “Oh, why does Everyone have read access to this? That shouldn’t be.” Implement a process where site admins certify permissions regularly.

Step 7: Establish Ongoing Oversight

Put in place a continuous monitoring process for new oversharing issues. Oversharing control isn’t a one-time fix; as new sites, Teams, and files get created, you need to catch misconfigurations proactively. Consider using Microsoft Purview’s reports or alerts to catch things like files shared externally or to huge groups, new public teams created, etc. Microsoft’s tools can automate alerts for these conditions, so make use of them to maintain a strong posture.

Step 8: Apply Sensitivity Labels and DLP Policies

Use Microsoft Purview Sensitivity Labels to classify data (Confidential, Highly Confidential, etc.) and bind those labels to protection settings. For instance, a “Confidential” label can encrypt files or prevent external sharing. Also configure Data Loss Prevention (DLP) policies to prevent or monitor oversharing of sensitive info (like blocking someone from emailing a list of customer SSNs). These tools not only prevent accidental leaks in day-to-day use, they also work with Copilot: if Copilot tries to access or output labeled content in ways it shouldn’t, DLP can intervene. Moreover, Copilot itself will carry forward the document’s label to its responses, as noted later.

Step 9: Implement Power Platform Governance

Extend your oversight to the Power Platform (Power Apps, Power Automate, etc.). Define DLP policies for Power Platform to control connectors (so someone can’t, say, make a flow that pulls data from a sensitive SharePoint list and posts it to an external service). Also consider having multiple environments (Dev/Test/Prod) with proper security so that “Citizen Developers” building agents or apps don’t inadvertently expose data. Essentially, prevent the Power Platform from becoming an ungoverned backdoor to your data.

Step 10: Educate and Enable Your Agent Builders

Finally, create guidelines and best practices for those who will be building or deploying AI agents (whether they are pro developers or business users). Establish training on handling data safely: e.g., how to choose appropriate knowledge sources for an agent, why not to include sensitive files in a broadly shared agent, how to test an agent’s output for any unexpected info. By fostering a data-aware culture among “agent makers,” you reduce the chance of someone inadvertently exposing information when designing an AI solution.

Sources:

- https://techcommunity.microsoft.com/blog/microsoft365copilotblog/from-oversharing-to-optimization-deploying-microsoft-365-copilot-with-confidence/4357963

- https://techcommunity.microsoft.com/blog/microsoft365copilotblog/microsoft-graph-connectors-update-expand-copilot%E2%80%99s-knowledge-with-50-million-ite/4243648

After you have completed these steps, you can now securely go on and start building productive agents.* To build agents, we have different platforms and features from Microsoft that we can rely on for. You'll find the most prominent examples in the next chapter. If you need help with this list, feel free to reach out to us so we can help you with this important preparation exercise.

Nothing prevents you in the meanwhile to create PoC or Test-Agents with sample data, manually uploaded files or specific data attached via RAG. But we recommend these steps before a larger implementation / rollout of agents.

Understanding differences between Agent Platforms

Step 1: Understand your Agent-Creators

After the foundation work to prepare the data, we need to understand which platforms are available to create those agents. We try to differentiate these tools by features and possibilities, but it's important to notice that creating agents and choosing the right tolling is a range. There are multiple ways to build AI agents in the Microsoft ecosystem. It’s important to pick the right one for your needs and your team’s skill level. It also clarifies when to leverage Azure AI Foundry versus built-in Copilot Studio tools.

Microsoft offers a set of different tools that can build agents by today. While they seem like each other, they are built for different target audiences and levels of expertise. Take a closer look at the overview below. Understanding who needs to create and maintain these agents, also shows us, which Knowledge sources (= data) we need to prepare for our Agents. Beside the tools in the list below, there are even more pro-code solutions to build agents like M365 Agents Toolkit, Visual Studio Code, Agent SDK and more. All our data preparation steps´ apply for them as well, since they access the same data like other agents do.

Source: https://www.egroup-us.com/news/microsoft-copilot-ai-integration/

Pre-Built (ootb)

- Copilot Agents

- SharePoint Agents

Knowledge Source

- SharePoint Online

- Your Graph-Knowledge

- Upload

- Web

Capabilities

- ✅ Retrieval

- ❌ Task

- ❌ Autonomous

Makers

- Copilot Studio

Knowledge Source

- SharePoint Online

- Upload

- Web

- Graph Connectors, Custom Connectors

- Power Platform Connectors

- Dataverse

- Azure AI Search

- SQL, MCP

Capabilities

- ✅ Retrieval

- ✅ Task

- ✅ Autonomous

Developers

- Azure AI Foundry

- Azure AI

Knowledge Source

- Azure AI Search

- Azure Blob

- Databases (Cosmos, SQL)

- Azure Data Lake

- Fabric

- (...)

Capabilities

- ✅ Retrieval

- ✅ Task

- ✅ Autonomous

**Step 2: Identify Use Cases and requirements for your platform. **

As you can probably think of, not every platform supports every use case. Agents can be used for simple tasks, like answering questions based on existing knowledge or complex, like automatically generating answers or executing processes. Also, the final UX where and how we want to access those agents is important to decide for a platform.

With these considerations in mind, we usually try to use the easiest solution possible to build our Agent. But also, we need to find the solution that is scalable for further development. But not every Agent needs to built on Agent AI Foundry from the very beginning.

Tip:

If you are not sure where to start to build your Agent, you always can use Copilot Studio and either integrate more Data from Azure AI there and publish it to Microsoft 365 Copilot. So get both "up- and downwards compatibility".

RAG (Retrieval-Augumented Generation) vs. SharePoint vs. Upload

Looking at it the first time, everything seems to be RAG – but there are differences! When you first explore Copilot Agents and its agent capabilities, it’s tempting to assume that all knowledge integration follows the same RAG (Retrieval-Augmented Generation) pattern. While they may all look like RAG from the outside: retrieving documents and generating answers, the way they work under the hood differs significantly. Understanding these differences is essential for choosing the right approach based on your goals, scale, and technical readiness. Here is a short explanation and overview

Manual File Uploads

Manual upload is the simplest way to add knowledge to a Copilot agent. You drag and drop documents directly into the Copilot Studio interface. Microsoft automatically indexes these files and retrieves relevant content during a user query. This is ideal for small pilots and early testing. Also, be aware that the content of the files should be accessible to everyone with access to agent. There is not Permission-Management here that you need to take care of. On the other hand, you will need to manually update these files in the long term if things change. Currently for Copilot Agents you can add up to 20 files manually.

**SharePoint Online **

This method uses Microsoft’s Retrieval API to access content directly from SharePoint Online connected via Graph Connector. The agent retrieves the most relevant content live at query time, respecting existing Microsoft 365 permissions. Content can be SharePoint sites, document libraries, folders or files. It’s dynamic, secure, and well-suited for scaling across departments or business units without managing your own infrastructure. Building up on the existing infrastructure, we are using the built-in security model from SharePoint with is a huge benefit compared to other knowledge options. Departments can easily update the files and that will be reflected within the agent. That means if two users with different access levels ask the agent, one might get an answer from a certain file while another user (without access) would not – which is exactly the behavior we want.

Note: SharePoint Lists are currently a not supported knowledge-type, so you can not index them out of the box (Q3 2025)

Custom RAG (Self-Managed)

In a classic RAG setup, you build and manage the entire retrieval pipeline yourself. That includes document preprocessing, chunking, embedding, storing in a vector database, and retrieving the top matches at query time. This gives you full control over how content is processed and retrieved, but it also brings complexity and maintenance overhead. It’s best suited for advanced use cases that require customization beyond what Microsoft’s managed services offer. This is not an in-built feature in Copilot or Copilot Studio; we would do this in Microsoft Azure.

A example when to use RAG could be for instance, If you needed to integrate an AI agent with a proprietary database or thousands of PDFs stored outside of Microsoft 365, and apply custom filters, a self-managed RAG might be necessary – but this requires significant effort.

source: https://learn.microsoft.com/en-us/azure/search/retrieval-augmented-generation-overview?tabs=docs

What to Choose and When

While all three approaches involve retrieving content to support language generation, only the custom self-managed solution qualifies as “true RAG” in the technical sense. For most organizations starting out, manual uploads or SharePoint connections are significantly easier and faster to implement. They provide strong results with minimal setup - and they let teams focus on use case design and adoption, rather than infrastructure.

A general advice from my side in this point:

Try to build the agents as close to your data as possible

Example: If your data is stored in large SQL databases or external CRM systems, a SharePoint Agent will not do the job. If we have all our knowledge in SharePoint, SharePoint Agents or Copilot Agents might be a good start.

Custom RAG should be considered only when your needs go beyond what the managed options can provide, not as the default starting point. A manual upload is great for the first pilot or for small pilots with limited and specific knowledge that is not often updated. In many scenarios we would just use a SharePoint library or site with the agent. Because of this, we are focusing on a scenario looking like that:

Microsoft 365 Copilot & Copilot Agents: Security & Compliance out of the box

Secure cloud infrastructure is the bedrock for enterprise AI. Microsoft provides the most secure framework possible for our Agents by putting them in context of Microsoft 365 Copilot. Every organization can trust their existing Security Framework based on Conditional Access and Multi-Factor authentication for access and their existing Governance Framework based on Microsoft Purview.

Agents that are used in M365 Copilot or published from Copilot Studio as a Teams Chatbot are only accessible within our tenant boundaries. That means we get the same level of security for these applications that we already have.

In addition to that, Microsoft offers several technical and organization commitments gathered as we call it "Enterprise Grade Data Protection".

Microsoft 365 Copilot: Enterprise Data Protection (EDP) for Prompts and Responses

- Contractual Protection: Prompts (user input) and responses (Copilot output) are protected under the Data Protection Addendum (DPA) and Product Terms. These protections are the same as those applied to emails in Exchange and files in SharePoint.

- **Data Security: **Encryption at rest and in transit, Physical security controls, Tenant-level data isolation

- Privacy Commitments Microsoft acts as a data processor, using data only as instructed by the customer. Supports GDPR, EU Data Boundary, ISO/IEC 27018, and more.

- Access Control & Policy Inheritance: Copilot respects:** **Identity models and permissions, Sensitivity labels, Retention policies, Audit settings, Admin configurations, AI & Copyright Risk Mitigation and Protection against: Prompt injection, Harmful content, Copyright issues (via protected material detection and Customer Copyright Commitment)

- **No Model Training: **Prompts, responses, and Microsoft Graph data are NOT used to train foundation models. **Copilot Agent's with SharePoint Online-Knowledge: **

- Permission & Sharing Model: Agents with SharePoint Online access always respects the permissions of the associated SharePoint site. That means, on one hand, you need to ensure that everyone who should have access has at least read permissions on the site; on the other hand, you must be vigilant about not granting unnecessary permissions that could expose sensitive information to unauthorized users. Properly configuring permissions is essential, as Copilot Agents will only be able to access and surface content that the querying user is permitted to see. Additionally, leveraging Microsoft Purview information protection ensures that sensitivity labels and data loss prevention (DLP) policies persist with the content

- Persistent Labels & DLP: Enable Microsoft Purview information protection so that sensitivity labels persist with content. Copilot agents inherit labels on source documents. Meaning if a file is classified “Confidential,” any AI-generated content or document from now on, will carry that label forward. This persistent label inheritance works in tandem with Data Loss Prevention policies to prevent AI from inadvertently exposing protected data. In practice, that means even if Copilot summarizes a sensitive file, the summary will be handled as sensitive too. This is something outstanding we do not find outside of Microsoft 365 and we won't see any AI Agent that is able to deeply integrate like this in the Microsoft 365 ecosystem!

Best Practices to prepare further SharePoint Online for Agent use

To prepare SharePoint Online for effective use with Copilot Agents, follow these best practices:

**Dedicated SharePoint Site: **

First, create a dedicated SharePoint site or a specific folder designed exclusively for your Copilot Agent’s knowledge base. This approach helps minimize issues related to oversharing and reduces the risk of users accidentally uploading sensitive or irrelevant files to the agent’s accessible repository. If you decide to use an existing SharePoint site, carefully review its contents to ensure that no confidential or sensitive information is stored there that should not be discoverable by the agent.

Granting Access

It is also important to ensure that all intended users have the necessary read permissions to access the site or folder. If you need to grant access manually, Ensure all intended users have read access to the site (for example, by adding them to the SharePoint site’s Visitors group or an appropriate Azure AD security group*) *to simplify the process and prevent accidental permission misconfigurations.

Prepare Files

When preparing documents for use with Copilot Agents, remember that the AI currently **cannot interpret embedded images within **files. Therefore, add descriptive image captions or alternative text to help ensure that important visual information is not lost. For text-heavy documents, make sure When summarizing or referencing content, keep the total to a maximum of 1.5 million words or 300 pages to ensure Copilot works effectively.

For Excel files, organize your data so that each file focuses either on numbers or on text, as mixed-content tables tend to yield less accurate results. Agents also respond most reliably to queries when the relevant data is contained within a single sheet of the workbook.

Agents respond best to Excel data when it’s contained in one sheet.

Example: If you have a large customer feedback survey stored in a single Excel file, separate the quantitative data (such as ratings and numerical responses) from the qualitative data (such as free-text feedback) into two different sheets. This method allows you to use tools like Python and Excel formulas to efficiently analyze the numerical data (e.g., calculate averages, sort results, determine confidence levels), while leveraging M365 Copilot’s sentiment analysis features to gain insights from the text-based feedback.

File Limitations

Finally, be aware of the file types and size limitations supported by Copilot Agents and Copilot Studio. The following table outlines current support:https://learn.microsoft.com/en-us/microsoft-365-copilot/extensibility/copilot-studio-agent-builder-knowledge#file-size-limits

Also acknowledge those best practices Microsoft has shared on document lengths: https://support.microsoft.com/en-gb/topic/keep-it-short-and-sweet-a-guide-on-the-length-of-documents-that-you-provide-to-copilot-66de2ffd-deb2-4f0c-8984-098316104389

| File type | SharePoint Online - Limit | Manual Upload - Limit |

|---|---|---|

| .doc | 150 MB | 100 MB |

| .docx | 512 MB | 100 MB |

| .html | 150 MB | not supported |

| 512 MB | 100 MB | |

| .ppt | 150 MB | 100 MB |

| .pptx | 512 MB | 100 MB |

| .txt | 150 MB | 100 MB |

| .xls | 150 MB | 100 MB |

| .xlsx | 150 MB | 100 MB |

Currently unsupported Filetypes in SharePoint Online: Officially everything else that is not listed there, is not officially supported.

Certain file types, such as CSV files, may function adequately even though they are not officially supported because they closely resemble plain text formats. However, most other file types—particularly container files like CAB, EXE, ZIP, as well as image, video, and audio formats such as PNG, IMG, MP3, and MP4—are not supported at this time.

**Final thoughts **

By following these recommendations, you can ensure that your Copilot Agents have access to well-structured, secure, and high-quality data, maximizing their usefulness and minimizing the risk of accidental data exposure. Investing time in preparing your SharePoint environment sets a strong foundation for successful AI agent deployment and adoption within your organization.

In fact many of our "Build-an-Agent" projects starting exactly with that. Not building the agent, but preparing the infrastructure and knowledge that we have a good quality data to use for the AI, because the Agent is only as good as the system beneath it!

Want to learn more?

Join our webcast to learn how to prepare your M365 environment for Copilot Agents from infrastructure and data governance to platform decisions.

Register now